Data Lakehouse Architecture

A data lakehouse architecture changes how organizations manage and analyze data. It reduces data sprawl, which is traditionally caused by fragmented, siloed data spread across systems, by combining the scalability and cost-effectiveness of a data lake with the performance and reliability of a data warehouse. The result is greater flexibility in data management and analysis.

One key advantage of a data lakehouse architecture is real-time data ingestion. This allows organizations to capture and process data as it is generated, enabling immediate insights and timely actions. Real-time ingestion facilitates quick decision-making and a competitive edge in today's fast-paced business environment.

Embracing this change now is crucial for several reasons. Firstly, it saves time and money by centralizing data storage and eliminating data movement between systems. This streamlines data management processes, reduces complexity, and accelerates analytics workflows, resulting in significant time and cost savings.

Additionally, a data lakehouse architecture enables self-service analytics. Business users and data analysts can directly access and analyze data without heavy reliance on IT or data engineering teams. Empowering users with self-service analytics enhances agility, encourages exploration and discovery, and drives innovation and better decision-making throughout the organization.

Why Customers Consider a Data Lakehouse Architecture

Multiple copies of data are driving up costs.

They have to change their architecture every time they get a new tool.

Their legacy data warehouse (such as SAP HANA or Teradata) is slow.

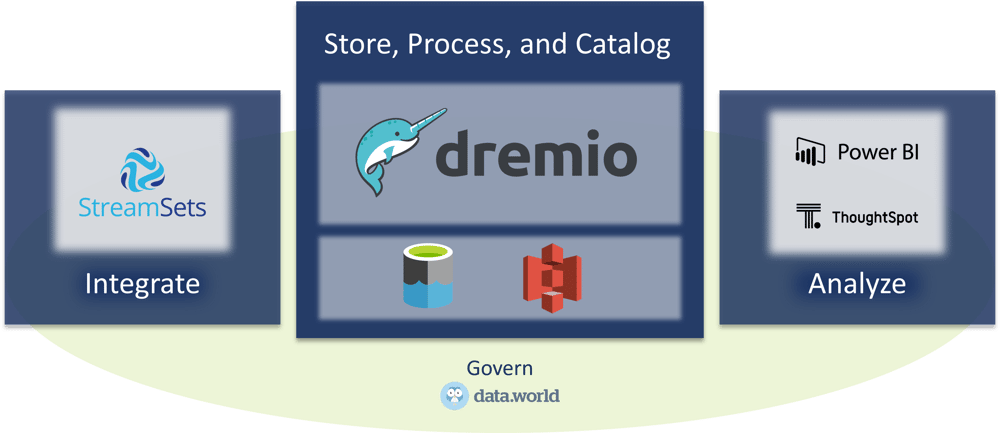

Data Integration

Source to Lake

When building a lakehouse, the first step is to determine which lake you will build it on, whether that is Amazon S3 or Azure Data Lake. It is usually determined by your cloud service provider.

SME's technology partner, StreamSets, is used to automatically connect to the databases, file systems, or other data sources and stream the data to the lake as it becomes available.

The partnership between StreamSets and SME makes DataOps the foundation for a business intelligence ecosystem with a single, fully managed, end-to-end platform for smart data pipelines.

Data Storage, Processing, and Cataloging

Lake to Query

Rather than moving data into separate silos for different use cases, the lakehouse brings the query engine directly to the lake and builds curated, virtual data sets without doubling or tripling storage.

Once the data is in the lake, Dremio serves as the lakehouse engine: it connects to the data lake and, for federation, to other data sources. Dremio can be the primary query engine for your data.
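The idea of curated, virtual data sets over a single copy of the data can be illustrated with a minimal sketch. It assumes Python's built-in `sqlite3` module as a stand-in for the lake's query engine; it is not Dremio's API, and the table, column, and view names are hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for the lake's query engine (an assumption for
# illustration only; this is not Dremio's API).
conn = sqlite3.connect(":memory:")

# The single physical copy of the data (hypothetical table and columns).
conn.execute("CREATE TABLE raw_orders (order_id INTEGER, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, "east", 120.0), (2, "west", 80.0), (3, "east", 50.0)],
)

# Two curated, virtual data sets defined as views: no rows are duplicated.
conn.execute(
    "CREATE VIEW sales_by_region AS "
    "SELECT region, SUM(amount) AS total FROM raw_orders GROUP BY region"
)
conn.execute(
    "CREATE VIEW large_orders AS "
    "SELECT order_id, amount FROM raw_orders WHERE amount >= 100"
)

sales_by_region = conn.execute(
    "SELECT region, total FROM sales_by_region ORDER BY region"
).fetchall()
large_orders = conn.execute("SELECT order_id, amount FROM large_orders").fetchall()

# New data lands once in the base table and is visible through the views
# without any copy or reload step.
conn.execute("INSERT INTO raw_orders VALUES (4, 'west', 200.0)")
refreshed = conn.execute(
    "SELECT region, total FROM sales_by_region ORDER BY region"
).fetchall()
```

Each use case gets its own view, while storage holds only the `raw_orders` rows. A production lakehouse engine would apply the same pattern to files in object storage rather than to a local table.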

As data grows into the terabyte or petabyte range, the lakehouse model comes out ahead on cost because there is only one copy of the data. And because the data only needs to be moved once, real-time and streaming data ingestion become more realistic and efficient.
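The cost argument is simple arithmetic, sketched below. The price per terabyte, the data volume, and the number of copies are hypothetical figures chosen for illustration, not vendor pricing.

```python
def monthly_storage_cost(data_tb: float, copies: int, price_per_tb: float) -> float:
    """Monthly storage cost when `copies` full copies of the data are kept."""
    if data_tb < 0 or copies < 1 or price_per_tb < 0:
        raise ValueError("data_tb and price_per_tb must be >= 0, copies >= 1")
    return data_tb * copies * price_per_tb


# Hypothetical figures, for illustration only.
PRICE_PER_TB = 20.0   # assumed monthly price per terabyte
DATA_TB = 500.0       # assumed data volume in terabytes

# Siloed approach: e.g. one copy in the lake plus two downstream copies.
silo_cost = monthly_storage_cost(DATA_TB, copies=3, price_per_tb=PRICE_PER_TB)

# Lakehouse approach: a single copy queried in place.
lakehouse_cost = monthly_storage_cost(DATA_TB, copies=1, price_per_tb=PRICE_PER_TB)

savings = silo_cost - lakehouse_cost
print(f"silos: {silo_cost:.0f}, lakehouse: {lakehouse_cost:.0f}, savings: {savings:.0f}")
```

The gap grows linearly with data volume, which is why the difference matters most at terabyte and petabyte scale. The sketch covers storage only and ignores compute and data movement costs.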


Query to Insights

Experience the power of seamless Single Sign-On (SSO) integrations with Business Intelligence (BI) tools, enabling rapid queries directly on the cloud data lake. Gain insights in milliseconds with responsive interactions from leading BI tools such as Power BI and ThoughtSpot.

Accelerate your path to valuable insights by moving from a traditional process that takes days or weeks to one that takes mere minutes. With our simplified, no-copy data architecture, paired with high-performance BI dashboards and interactive analytics directly accessible on the SQL lakehouse, you can unlock actionable intelligence at lightning speed.

Unified Access and Simplified Governance

The data lakehouse boasts a vertically integrated semantic layer, a single point of access that simplifies data management for all users and tools within the organization. This layer acts like a central translator, offering several key benefits:

- Shared business logic and KPIs: Everyone uses the same definitions and calculations for metrics, ensuring consistent and reliable insights across departments.

- Unified data access: Users access data through a single interface, eliminating the need to navigate various sources and reducing the risk of inconsistencies.

- Enforced security and governance: Security policies and access controls are applied at this central layer, ensuring data compliance and minimizing the risk of unauthorized access or manipulation.

This approach provides a single, consistent view of the data for all stakeholders, regardless of their technical expertise or the tools they use. It fosters better collaboration and faster decision-making, and it eases the challenges associated with complex data governance, compliance, and agility.
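A minimal sketch of the semantic layer idea follows: metric definitions and access rules live in one place, and every user or tool queries through it. The `SemanticLayer` class, the metric names, and the roles are all hypothetical and do not represent any particular product's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

Row = Dict[str, float]


@dataclass
class SemanticLayer:
    """Hypothetical single point of access over one shared data set."""
    rows: List[Row]
    metrics: Dict[str, Callable[[List[Row]], float]] = field(default_factory=dict)
    grants: Dict[str, Set[str]] = field(default_factory=dict)

    def define_metric(self, name: str, fn: Callable[[List[Row]], float]) -> None:
        # Shared business logic: one definition per KPI for every consumer.
        self.metrics[name] = fn

    def grant(self, role: str, metric: str) -> None:
        # Governance: access rules are stored centrally, not per tool.
        self.grants.setdefault(role, set()).add(metric)

    def query(self, role: str, metric: str) -> float:
        # Unified access: every request passes through the same checks.
        if metric not in self.metrics:
            raise KeyError(f"unknown metric: {metric}")
        if metric not in self.grants.get(role, set()):
            raise PermissionError(f"role {role!r} may not read {metric!r}")
        return self.metrics[metric](self.rows)


# Hypothetical data, metrics, and roles.
layer = SemanticLayer(rows=[
    {"revenue": 100.0, "cost": 60.0},
    {"revenue": 200.0, "cost": 90.0},
])
layer.define_metric("total_revenue", lambda rows: sum(r["revenue"] for r in rows))
layer.define_metric(
    "gross_margin",
    lambda rows: (sum(r["revenue"] for r in rows) - sum(r["cost"] for r in rows))
    / sum(r["revenue"] for r in rows),
)
layer.grant("analyst", "total_revenue")
layer.grant("finance", "total_revenue")
layer.grant("finance", "gross_margin")

analyst_revenue = layer.query("analyst", "total_revenue")
finance_margin = layer.query("finance", "gross_margin")
```

Because both roles call the same `total_revenue` definition, their numbers cannot drift apart, and a role without a grant is refused at the layer rather than in each BI tool.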

To tackle complex data governance, we recommend tools like data.world, which help catalog and manage data assets effectively. Learn more about the agile data governance approach.
